Tuning a Go Application with GC Issues in a Few Steps

Lessons Learned in Performance Tuning and Garbage Collection

Motivation

I am working on a project that polls messages from Kafka and writes them to Google Cloud Storage. I compared it with the existing solution, the Confluent Google Cloud Sink Connector, in the same environment (a topic with 50M+ messages), and our app could not keep up 😔: it finished one hour after the connector did. While investigating the cause of this latency, I ran into GC issues in our app. I want to share my investigation and implementation. By the end of the journey, we no longer fell behind; we finished 10 minutes before the connector.

Throughout this journey, writing benchmark tests and reading pprof results was essential, because premature optimization is the root of all evil. If you are not familiar with pprof, you can take a look at my pprof article.
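As a minimal sketch of that workflow, here is a benchmark for a hypothetical hot-path function, `buildKey` (an illustrative name, not code from our project). In a real project, `BenchmarkBuildKey` would live in a `_test.go` file and run with `go test -bench . -benchmem -cpuprofile cpu.out -memprofile mem.out`, followed by `go tool pprof cpu.out`; here it is driven through `testing.Benchmark` so it runs as a plain program.

```go
package main

import (
	"fmt"
	"testing"
)

// buildKey is a hypothetical hot-path function we want to measure.
func buildKey(topic string, partition int, offset int64) string {
	return fmt.Sprintf("%s/%d/%d", topic, partition, offset)
}

// BenchmarkBuildKey follows the standard benchmark shape used by `go test`.
func BenchmarkBuildKey(b *testing.B) {
	b.ReportAllocs() // report allocs/op and B/op alongside ns/op
	for i := 0; i < b.N; i++ {
		_ = buildKey("orders", i%12, int64(i))
	}
}

func main() {
	// testing.Benchmark runs a benchmark function outside of `go test`.
	res := testing.Benchmark(BenchmarkBuildKey)
	fmt.Println(res.String(), res.MemString())
}
```

Comparing allocs/op before and after a change is what tells you whether an optimization actually reduced heap pressure.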

A Little Bit About Stack & Heap Allocations

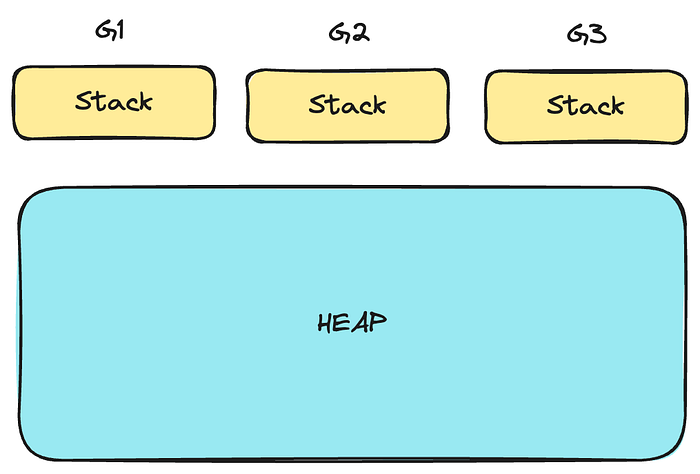

In Go, memory is allocated in one of two places: the stack or the heap. Each goroutine has its own stack, which is a contiguous block of memory. In contrast, the heap is a large shared memory area accessible by all goroutines. The diagram above illustrates this concept.

The stack is self-managing and used exclusively by a single goroutine. In contrast, the heap relies on the garbage collector (GC) for cleanup, so more heap allocations put more pressure on the GC. When the GC runs, its background workers aim to use about 25% of the available CPU, and it can introduce “stop-the-world” latency, during which the application is momentarily paused.

In general, the cost of garbage collection is directly proportional to the volume of heap allocations made by your program.
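The difference can be observed directly. In this sketch (`point`, `sumOnStack`, and `storeOnHeap` are illustrative names), the first function keeps its value local so the compiler can place it on the stack, while the second stores a pointer in a package-level variable, forcing the value onto the heap. `testing.AllocsPerRun` counts heap allocations per call.

```go
package main

import (
	"fmt"
	"testing"
)

type point struct{ x, y int }

// Package-level sinks keep the compiler from discarding results.
var (
	pointSink *point
	intSink   int
)

// sumOnStack keeps its point local, so escape analysis can place it on the
// goroutine's stack: no heap allocation, nothing for the GC to clean up.
func sumOnStack(a, b int) int {
	p := point{a, b}
	return p.x + p.y
}

// storeOnHeap publishes a pointer in a package-level variable, so the point
// outlives the call, escapes to the heap, and becomes work for the GC.
func storeOnHeap(a, b int) {
	pointSink = &point{a, b}
}

func main() {
	stack := testing.AllocsPerRun(1000, func() { intSink = sumOnStack(1, 2) })
	heap := testing.AllocsPerRun(1000, func() { storeOnHeap(1, 2) })
	fmt.Printf("stack: %.0f allocs/run, heap: %.0f allocs/run\n", stack, heap)
}
```

Building this file with `go build -gcflags="-m"` should show the compiler reporting the composite literal in `storeOnHeap` as escaping to the heap.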

Based on our experience, here is our list of ways to reduce heap allocations 👇

- Use sync.Pool to reuse objects instead of allocating them every time. Be careful with the implementation: a wrong implementation can do more harm than good.

- Prefer strings.Builder over + concatenation

- Try to preallocate slices and maps when their size is known in advance

- Reduce pointer usage

- Try to avoid large local variables in a function

- Observe compiler decisions via the `go build -gcflags="-m" ./...` output, and take a look at inlining optimizations too (related: the `-l` flag, which disables inlining)

- Use the struct data alignment technique, with the help of the fieldalignment linter

You can also take a look at the great resources below. 👇

- Go Profiler Notes

- How do I know whether a variable is allocated on the heap or the stack?

- Understanding Allocations in Go article

- 100 Go Mistakes and How to Avoid Them book

Changing the JSON Library

- We replaced the encoding/json library with bytedance/sonic for serializing and deserializing our ~1.5 KB objects. In a local load test (100k Kafka messages), the pprof results went from 2.55s to 0.68s 👇

By changing your JSON library, you gain speed but sacrifice some stability; that’s the tradeoff. In terms of stability, encoding/json is the safer choice compared to the alternatives.
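One way to keep that tradeoff reversible is to route all JSON calls through package-level function variables. This sketch uses encoding/json as the default; sonic’s `Marshal` and `Unmarshal` have matching shapes and could be assigned instead. The `message` type and `roundTrip` helper are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// The codec lives in one place so the JSON library can be swapped without
// touching call sites. Assumption: a drop-in library such as
// github.com/bytedance/sonic (sonic.Marshal / sonic.Unmarshal) could be
// assigned here instead of encoding/json.
var (
	marshal   func(v any) ([]byte, error)      = json.Marshal
	unmarshal func(data []byte, v any) error = json.Unmarshal
)

// message is a hypothetical payload standing in for a Kafka record.
type message struct {
	Key   string `json:"key"`
	Value string `json:"value"`
}

// roundTrip serializes and deserializes a message through the codec.
func roundTrip(in message) (message, error) {
	data, err := marshal(in)
	if err != nil {
		return message{}, err
	}
	var out message
	err = unmarshal(data, &out)
	return out, err
}

func main() {
	out, err := roundTrip(message{Key: "k1", Value: "hello"})
	fmt.Println(out, err)
}
```

If the faster library misbehaves in production, falling back is a two-line change.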

GOGC and GOMEMLIMIT Tuning

- GOGC (Garbage Collection target percentage) is an environment variable in Go that controls the garbage collector’s aggressiveness by specifying the target heap growth rate. For example, GOGC=100 means the heap can grow by 100% before triggering garbage collection, while lower values result in more frequent collections but smaller memory usage.

- GOMEMLIMIT is an environment variable introduced in Go 1.19 that sets a soft limit on memory usage for the Go runtime. When the total memory used by the program approaches this limit, the garbage collector becomes more aggressive in staying within the defined budget, helping manage memory in memory-constrained environments.

You can take a look at this great presentation and the GC guide article for more detail.

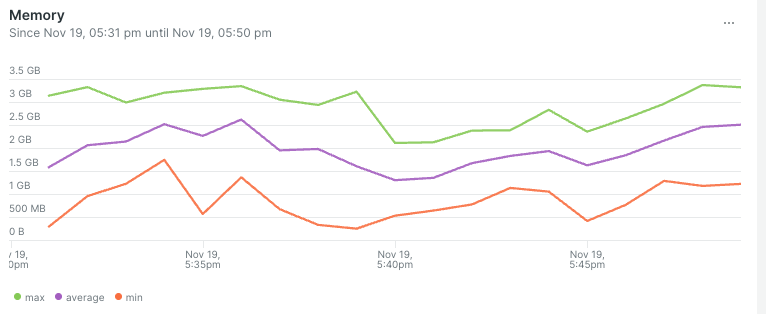

We set GOGC=off and set GOMEMLIMIT to 90% of the cgroup’s memory limit using the automemlimit library. Setting GOGC=off disables regular garbage collection based on heap growth, so the GC only runs when memory usage approaches GOMEMLIMIT, which acts as a soft limit on the Go runtime’s memory.

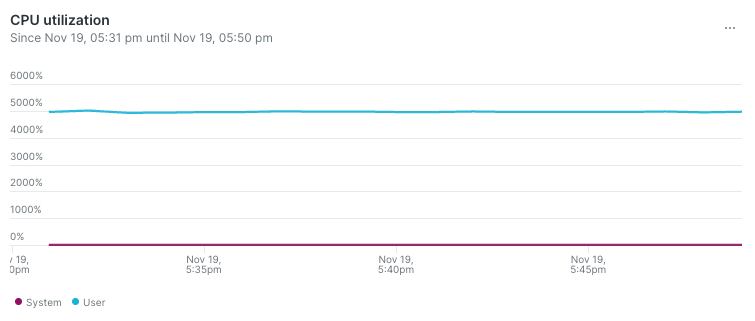

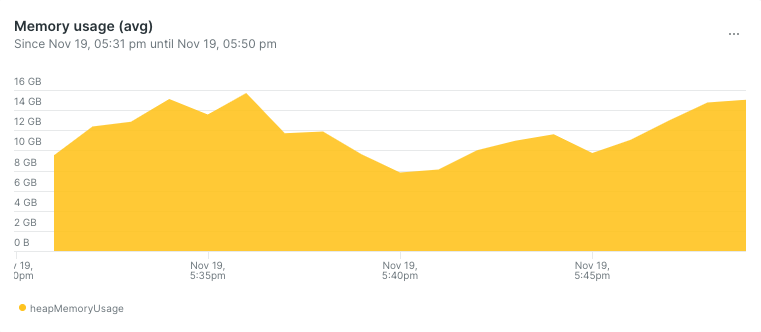

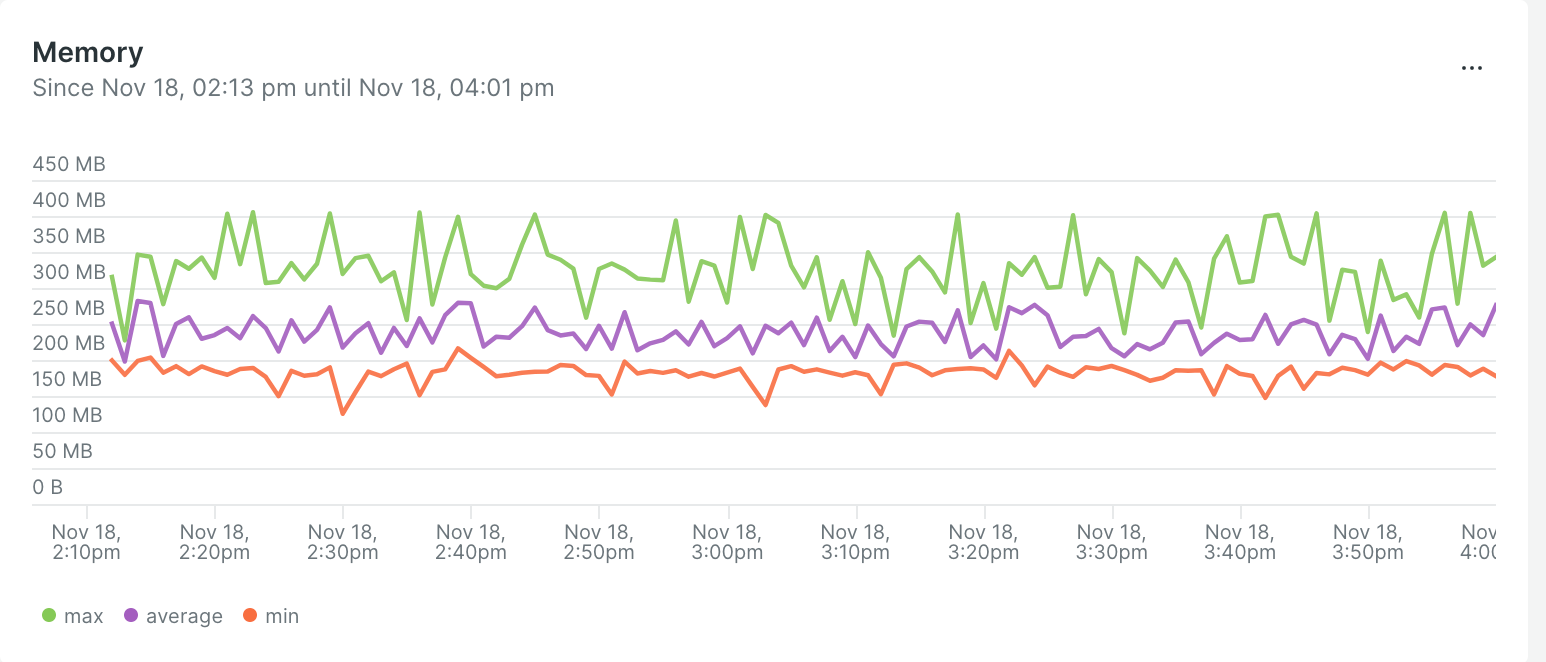

Our app’s memory and CPU settings are shown below (ref):

```yaml
resources:
  limits:
    cpu: '2'
    memory: 4Gi
  requests:
    cpu: '1'
    memory: 2Gi
```

Results

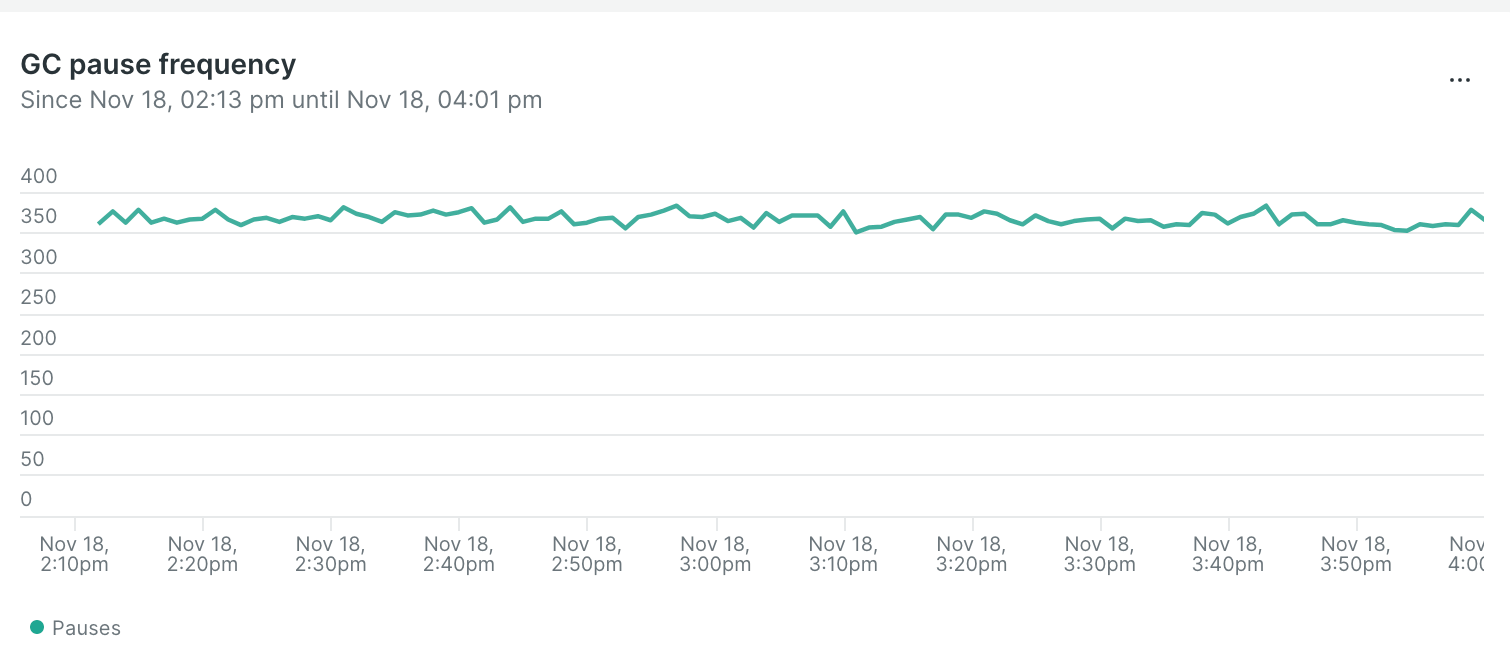

- GC pause frequency decreased from 350 to 30 calls per minute.

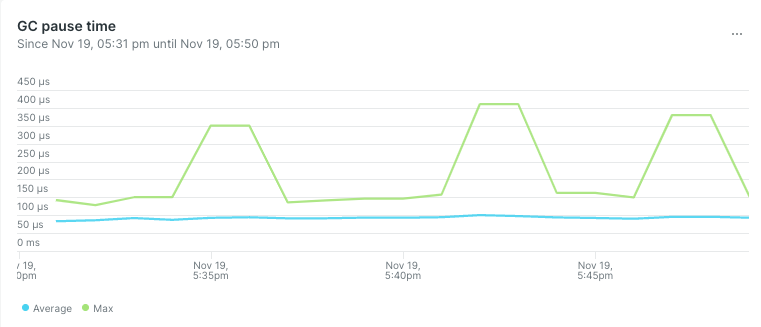

- GC pause time decreased from 40ms to 400µs at peak.

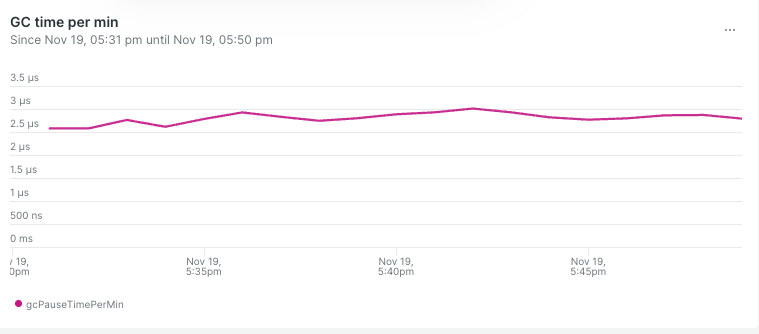

- GC time per minute decreased from 60µs to 2.5µs.

- CPU utilization increased from 3000% to 5000%.

- Our total memory usage increased (we accepted this in exchange for less aggressive GC).

Yes, we used more memory, but we reduced overall GC and CPU time 🚀

Thank you for reading so far 💛. All feedback is welcome 🙏

We are still trying to optimize our app; this is not the end, but the beginning 😃 💃

I also thank Emre Odabas and Mehmet Sezer for supporting me on this journey. 💪

Further Reading

- https://blog.twitch.tv/en/2019/04/10/go-memory-ballast-how-i-learnt-to-stop-worrying-and-love-the-heap/

- https://github.com/uber-go/automaxprocs (sets GOMAXPROCS based on the container’s Kubernetes CPU limit instead of the node’s CPU count)